2018 Global Six Sigma Survey Results

In 2018, we conducted a global survey of Six Sigma practices in medical laboratories. Now it's time to share the results and reward the participants.

Sten Westgard

September 2018

Our survey, which ran from May until September of this year (2018), collected responses in Chinese, Spanish, and English, and drew more than 550 laboratory responses from more than 100 countries. It is probably one of the largest surveys of Six Sigma use in medical laboratories.

We focused as close to the bench as we could get, accumulating most of our responses from supervisors and technologists, with the third most common respondent being the laboratory director. We excluded sales reps and vendors from the responses.

More public and government hospital labs responded than private labs, with fewer than 20% of the respondents coming from reference or independent laboratories. Only a tiny percentage of office labs and blood banks responded.

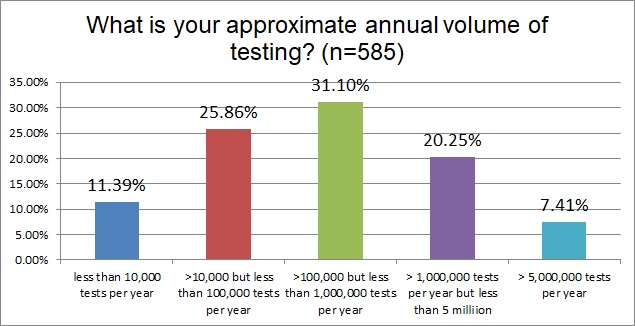

The labs that responded formed a pretty good "bell curve" around the volume of 100,000 to 1,000,000 tests per year. Fewer than 10% were megalabs with >5MM tests per year, and slightly more labs, 11.4%, represented smaller volumes of less than 10,000 tests per year.

In this question, we tried to understand which regulations were the primary drivers of the laboratories. The most common response is that local or national regulations are the determining factor in laboratory practices. Close behind it, though, is ISO 15189, confirming again that this ISO standard is emerging as the global standard for laboratory quality. CLIA, CAP, and Joint Commission requirements impacted around 10% to 18% of the laboratories that responded. Considering that the US laboratory response in this survey was 18%, that's not a surprising finding.

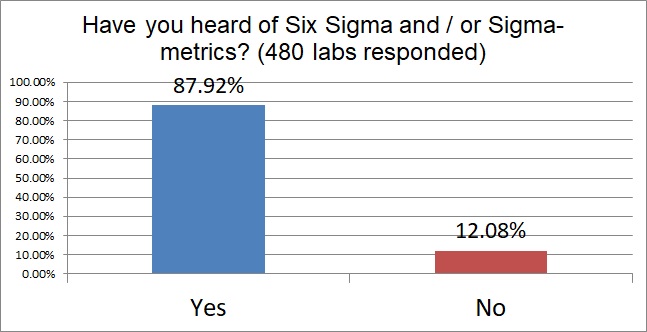

Labs responding to this survey displayed high familiarity with Six Sigma. Almost 90% of the labs responding have already heard of Six Sigma. This is a question, of course, that could be impacted by self-selection bias - that is, labs that are unfamiliar with Six Sigma are less likely to fill out a survey that would probe their ignorance of the concept.

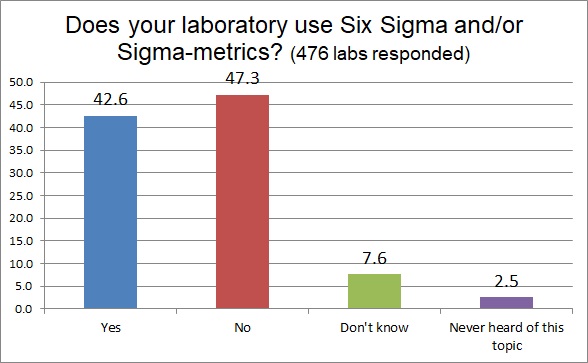

Here's where the rubber meets the road. While a large number of respondents said they were familiar with the Six Sigma concepts, far fewer of them actually USE Six Sigma in their laboratory. In fact, less than half. But above 40% is still quite a high proportion of labs using Six Sigma. In fact, we think that this may be a bit too high to be truly believable. We'll see in the following questions how we can bring that number back down to earth, to a more realistic percentage.

Very few of our respondents have received formal training in Six Sigma. Mostly they are hearing and learning about Six Sigma through books, studies, our own Westgard training and this website. A fair number of them have no training at all - they are just interested in it.

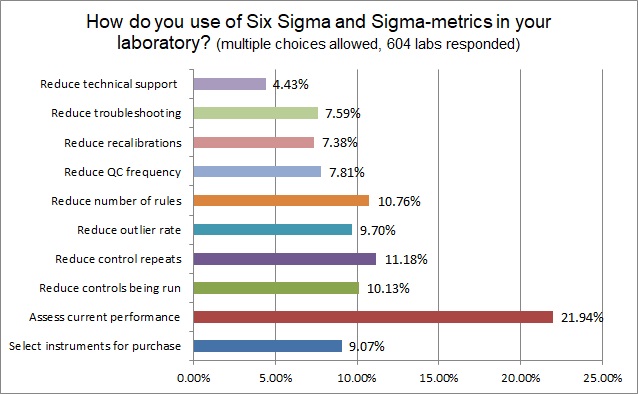

There are many possible benefits and practical outcomes of using Six Sigma, but the most common benefit that labs are utilizing Six Sigma for is to assess their current performance. About 10% of labs are using Six Sigma to reduce their outliers, repeats, numbers of controls, numbers of rules. Fewer still are using Six Sigma to reduce their recalibrations, trouble-shooting, and technical support calls. One surprising finding is that few labs are using Six Sigma to empower their selection of instruments - clearly this is one of the most useful applications - to be able to disqualify poor quality instruments from ever being allowed into the laboratory.

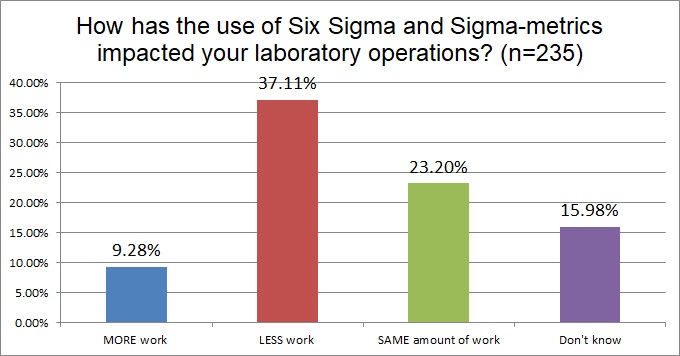

More than a third of laboratories that use Six Sigma have found that it reduces the overall amount of work they have to perform. About a quarter of laboratories say it doesn't change the amount of work they do. And less than 10% of labs say that applying Six Sigma actually increases their workload. This is a good sign - adopting Six Sigma is a way to improve productivity in the laboratory.

Also note that the response rate here has dropped: only 235 labs responded to this question. This is starting to give us a better idea of how many labs actually put Six Sigma to use.

Now we begin to question labs very closely about their Six Sigma practices, and we see that the number of labs responding drops to about a quarter of the total. This is probably the more realistic estimate of how many labs are actually using Six Sigma: somewhere around 15% to 25%.

When we asked them what goals they use to calculate their Sigma-metrics, the most popular goals are the CLIA goals (chosen by more labs than the number of US labs who responded), proving that these goals are globally dominant. The second-most popular total allowable error goals are the 2014 "Ricos goals", followed by the Australian RCPA goals. The CAP PT goals are popular for about a quarter of labs worldwide, demonstrating the wide reach of the CAP accreditation program (which, by the way, is another extension of the CLIA goals). Through the CAP and JCI, US goals from CLIA and CAP appear to be the dominant standard for calculating Sigma-metrics. Even though only 18% of the lab respondents were from the US, the popularity of these goals is nearly four times higher.

This is critical because, with so many different sources of allowable total errors, the calculations for Sigma-metrics are not standardized. Using a Rilibak goal to calculate Sigma-metrics will result in different design choices and implementation outcomes than if you use the CLIA goals. There have been frequent complaints that the CLIA goals are too wide, but our analyses have shown that not only are the CLIA goals not the widest goals (look at the Rilibak interlaboratory comparison goals if you're seeking the biggest goals), but some of the CLIA goals are as tight and challenging as the 2014 Ricos goals (see sodium, chloride, urea, etc.). The least-used formal set of goals is the Target Measurement Uncertainty goals - most of which are unknown, non-standardized, and - you guessed it - uncertain.
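To make the dependence on the chosen goal concrete, here is a minimal Python sketch. It assumes the commonly used formula Sigma = (TEa - |bias|) / CV with all terms in percent. The goal names and every number below are hypothetical illustrations, not actual CLIA, Ricos, or Rilibak values and not survey data.

```python
def sigma_metric(tea_pct: float, bias_pct: float, cv_pct: float) -> float:
    """Commonly used formula: (TEa - |bias|) / CV, all terms in percent."""
    if cv_pct <= 0:
        raise ValueError("CV must be positive")
    return (tea_pct - abs(bias_pct)) / cv_pct


# Hypothetical allowable total error goals for one analyte (illustrative only).
goals_pct = {"wider_goal": 10.0, "tighter_goal": 6.0}

# Hypothetical observed performance of a single method.
bias_pct, cv_pct = 1.0, 2.0

# Same method, same data, different goal: the Sigma-metric changes.
sigma_by_goal = {
    name: sigma_metric(tea, bias_pct, cv_pct) for name, tea in goals_pct.items()
}

for name, sigma in sigma_by_goal.items():
    print(f"{name}: Sigma = {sigma:.1f}")
```

With these made-up numbers the same method looks acceptable under the wider goal and poor under the tighter one, which is why the source of the goal should always be reported alongside the Sigma-metric.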

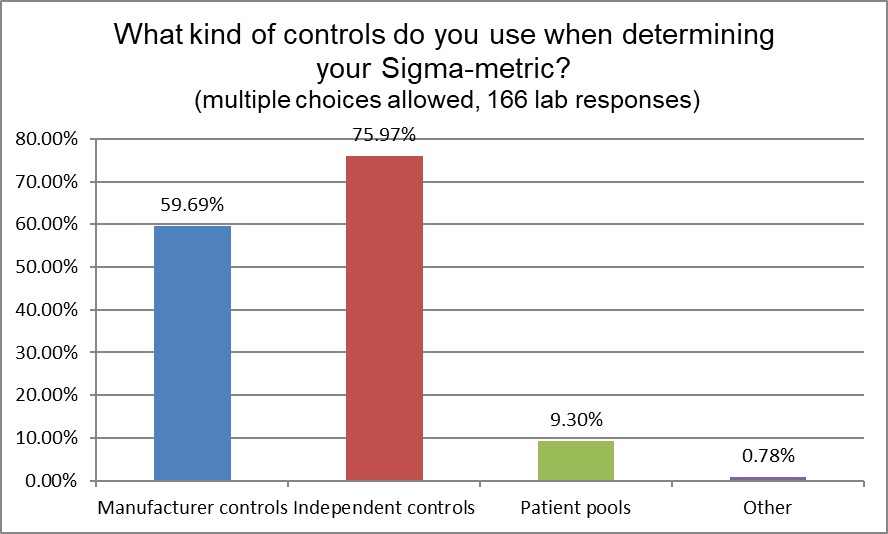

Next, we inquired into what controls laboratories were using to help them gather the data to calculate Sigma-metrics. Independent third-party controls are the most popular choice, used by more than 75% of labs, but nearly 60% of labs are also using manufacturer controls. Given that ISO 15189 strongly recommends the use of independent controls, we see a divergence between the standards and best practices and the actual practice in the field.

What's more interesting is that when laboratories want a reliable Sigma-metric, they trust independent controls twice as much as they trust manufacturer controls. Laboratories are well aware that manufacturer controls are too often tweaked, adjusted, nudged, and otherwise manipulated so that they give a consistent "in" control message, regardless of real changes in reagent performance or impacts to patients. This is why ISO 15189 recommends using independent controls. But here we see the laboratories, too, acknowledging the unreliability of manufacturer controls. They may run a lot of manufacturer controls, but when they want a trusted assessment of performance, they want to use independent controls.

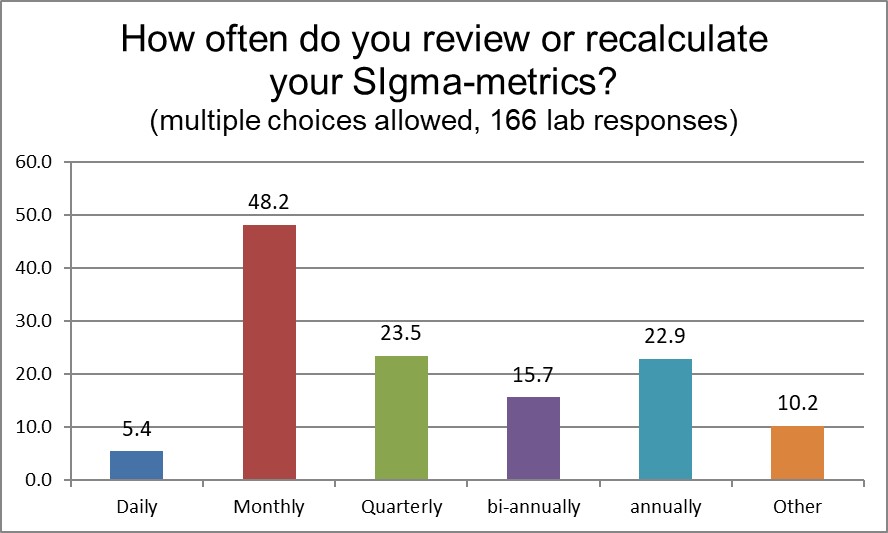

Next, we asked laboratories that use Sigma-metrics to tell us how often they calculate their metrics. In our own Sigma VP program, we advise labs to consider calculating quarterly or every six months, but the largest response is that Sigma-metrics are being calculated monthly. In some ways, this is not surprising, since labs typically perform a monthly QC review. And as long as you're checking your controls, and you have some updates to imprecision, and perhaps you see there is a new update to your peer group program, you can check your Sigma-metric. This is not an indication that Sigma-metrics change every month; indeed, we've seen labs in our Sigma VP program with very stable performance over years of operation. But labs are monitoring this metric closely.

Note: very few labs monitor the Sigma-metric every day. That is definitely not recommended.

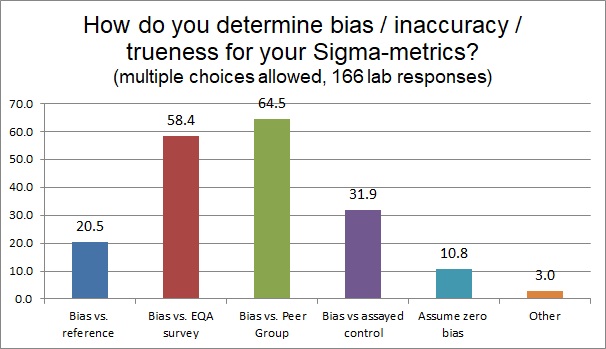

The components of a Sigma-metric calculation include not only the allowable total error, but also an estimate of bias (inaccuracy or trueness) and imprecision. When it comes to assessing trueness or bias, there are many ways to do this. Ideally, one should compare method performance against a reference method or reference material. It's impressive to see that 20% of laboratories are attempting to do that - reference methods and materials are usually very expensive, difficult to find, and impractical for many analytes. The most common way to determine bias is the use of the peer group program - which is a way of using a lot of control data and a lot of peer labs to determine a very relevant bias. The peer bias may not be the most traceable, but if your laboratory is significantly biased from a group of similar instruments, using similar reagents and similar controls, that's a strong sign that there is something wrong with YOUR lab. And the peer group survey is updated constantly. The second most popular way to determine bias is to use the EQA survey - the main drawback of this, of course, is that most EQA surveys are infrequent (sometimes as rare as only twice a year in the USA).

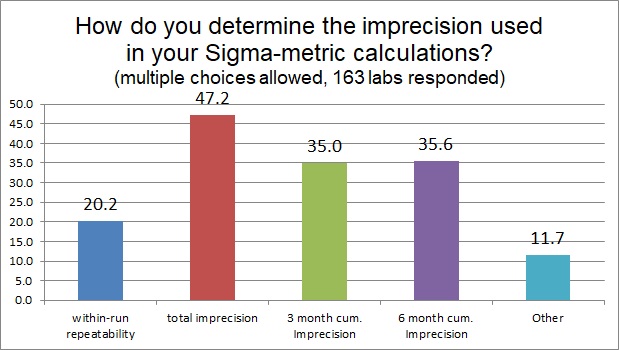

When it comes to imprecision, there is really just one way - using controls - to determine your CV%, but there is a choice of the time period over which you calculate it. Using a short-term, within-run, or repeatability estimate of imprecision is usually too optimistic, but we see that 20% of labs will do that for at least some of their tests. The most popular response is to use total imprecision, sometimes called between-run, or within-lab reproducibility (this may be typically one month of data for a chemistry test). The best practice recommendations, found in the CLSI C24 guideline, state that cumulative estimates of imprecision from 3 to 6 months are ideal. We see a significant number of labs using those in their calculations.
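The three inputs discussed above (allowable total error, bias, and imprecision) can be combined as in the following Python sketch. It assumes the commonly used formula Sigma = (TEa - |bias|) / CV, estimates bias as the percent difference from a peer group mean, and estimates CV% from a set of control results. The function names and all numbers are hypothetical, chosen only to illustrate the arithmetic.

```python
from statistics import mean, stdev


def cv_percent(control_results: list[float]) -> float:
    """Imprecision as CV% = 100 * SD / mean of the control results."""
    return 100.0 * stdev(control_results) / mean(control_results)


def peer_bias_percent(lab_mean: float, peer_mean: float) -> float:
    """Bias% relative to the peer group mean for the same control lot."""
    return 100.0 * (lab_mean - peer_mean) / peer_mean


def sigma_metric(tea_pct: float, bias_pct: float, cv_pct: float) -> float:
    """Commonly used formula: (TEa - |bias|) / CV, all terms in percent."""
    return (tea_pct - abs(bias_pct)) / cv_pct


# Hypothetical control results; in practice this would be the cumulative
# data set (for example several months) rather than five points.
control_results = [98.0, 100.0, 102.0, 100.0, 100.0]
peer_mean = 98.0   # hypothetical peer group mean
tea_pct = 10.0     # hypothetical allowable total error goal, in percent

cv = cv_percent(control_results)
bias = peer_bias_percent(mean(control_results), peer_mean)
sigma = sigma_metric(tea_pct, bias, cv)

print(f"CV = {cv:.2f}%, bias = {bias:.2f}%, Sigma = {sigma:.2f}")
```

Swapping in a within-run data set for `control_results` would typically shrink the CV and inflate the Sigma-metric, which is the "too optimistic" effect described above.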

One of the common challenges in determining a Sigma-metric is deciding which level is the most important level for determining the quality of the method. Since performance over the working range of a test almost inevitably varies, this can mean that for each control level, there may be a different Sigma-metric. So when it comes to determining the Sigma-metric of a test, what does one lab do? Take the worst Sigma-metric? The best? An average of all levels?

The most popular response is to take the worst-case scenario, the lowest Sigma-metric, so that any QC plan you design will work for all the tests. This is in contrast to our recommendation: we advise labs to choose a single most important critical decision level and plan around that. There may be poor performance at the very low end of the range, but if no medical decisions are being made there, it's an irrelevant piece of data. So in our Sigma VP program, we provide a single decision level for all of them to benchmark the assays.

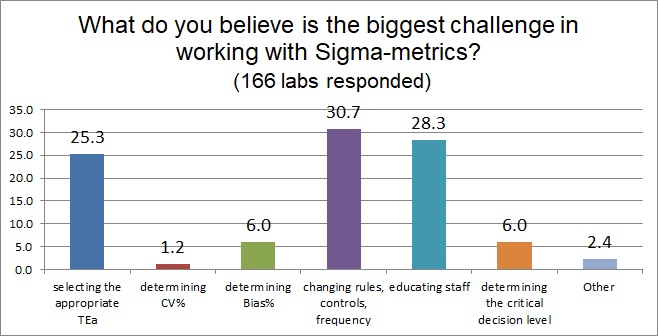

As many labs know, implementing Sigma-metrics is not without challenges. The most difficult challenge, however, is actually making use of the metric. It's easier to calculate a Sigma-metric than to do something with it. Changing your QC rules, controls, and even your QC frequency, and educating staff to understand a Sigma-metric, are the two biggest challenges that labs report facing. The third challenge is the choice of the allowable total error, about which we have seen lengthy debates both on this website and in the journals.

Here's where the survey gets interesting. Given the ability of Sigma-metrics to differentiate between the instrumentation offered on the market, how many labs ask for Sigma-metric data from their prospective vendors? More than half of labs ask. A quarter of them ask; another quarter ask but aren't provided that data by the manufacturers. Unfortunately, nearly 40% of labs don't ask for performance data from their vendors. It's like buying a car without asking its mileage per gallon - you're going to be blind to the performance and you may not get the instrument you need.

Even if every laboratory isn't asking for Sigma-metrics, there is a major impact when manufacturers don't provide that performance data. A majority of labs report that the refusal of a manufacturer to supply Sigma-metric data will somewhat or strongly influence their purchasing decision. For manufacturers seeking to promote their products, failing to provide crucial data is increasingly a sign of weakness.

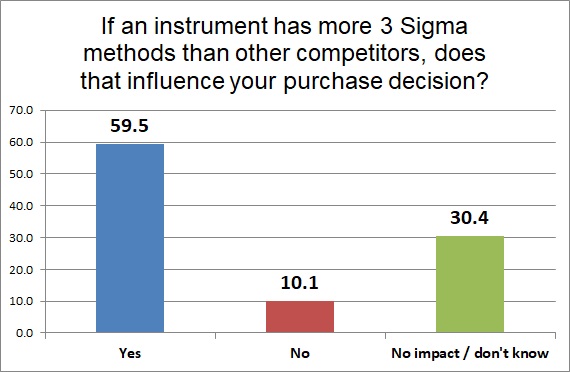

If a manufacturer does provide information, and they have more 3 sigma assays than their competitor, for nearly 60% of laboratories, that's a key factor. Once the information is obtained, knowledge of how weak an instrument is compared to other instruments is quite powerful.

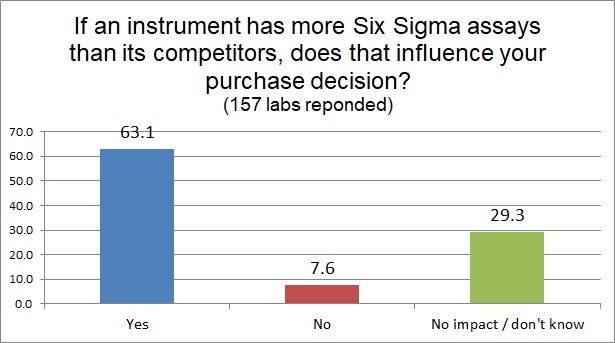

But even more impactful than knowing an instrument has more poor Sigma assays is knowing that your instrument has more Six Sigma assays than the competition: nearly 2 of 3 labs state that this information will influence their purchasing decision.

In both cases, knowing which instrument has the most poor assays and knowing which instrument has the most Six Sigma assays makes a difference in the decision-making of laboratories. Manufacturers may have resisted providing this performance data in the past, but in the future this is no longer an option. Refusing to provide data is tantamount to an admission of weakness.

In the ongoing debate between measurement uncertainty and Six Sigma metrics, there's a clear verdict: Six Sigma matters twice as much to laboratories making purchasing decisions as measurement uncertainty. While measurement uncertainty is mandated for manufacturers and laboratories, they find less value in it than in calculating a metric that's not mandated.

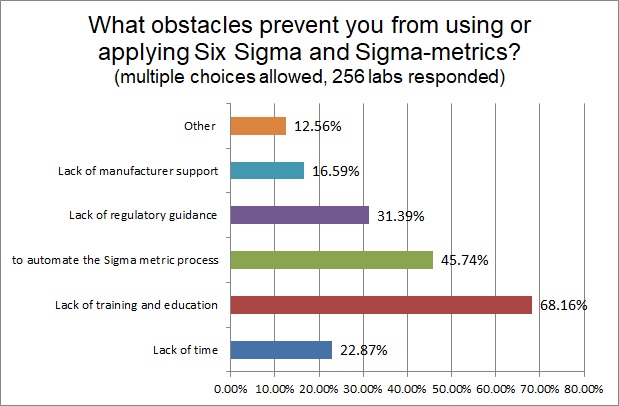

For all the labs that haven't been able to use Sigma-metrics, we asked what the obstacles were that were preventing them from adopting Six Sigma in their operations. The biggest challenge is a lack of training and education - we need to get more resources out there for labs to learn how to understand and adopt the technique. The next biggest obstacle is a lack of software to automate the Sigma-metric calculation process - something that is rapidly changing as more and more programs are adding these capabilities to their feature list. About a third of labs also would like a regulatory mandate or support - something official - that would encourage them to adopt Sigma-metrics.

Nearly half of laboratories want to calculate their own Sigma-metrics, and just under that percentage, 46% of labs, would prefer to have this automated in their laboratory software.

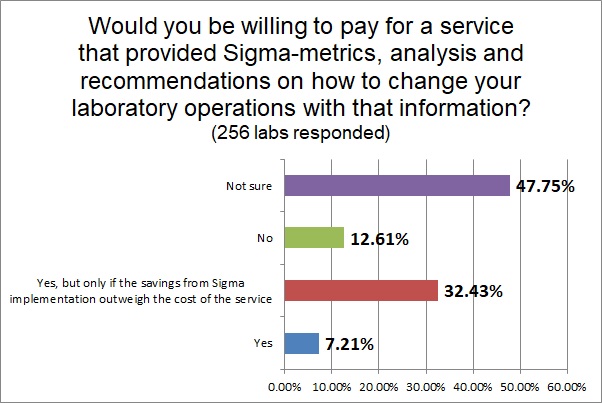

Finally, labs expressed a wary willingness to pay for a service that would calculate Sigma-metrics for them. Only a small percentage would want it outright, but a third of labs would be willing to pay if the savings of the service outweighed the cost of the service. Only a small number of labs would outright refuse a service that offered them Six Sigma metrics and savings.

So that's the state of Six Sigma in 2018. Some interesting findings, some promising avenues of progress, and definitely a roadmap for where improvements must be made in labs, in manufacturers, and in education, training, and service.

A chart-pack of all of these findings will be available in our Download section.

Thanks again to all those who took part in the survey. If we didn't have all these laboratories willing to speak their mind, we wouldn't have this valuable information to share with the world.