| Course Lesson |

Primary Materials |

| 1. Skills still needed |

Is there still a need to worry about quality? Many would have you think so. While it would be nice to live in a world where we take quality for granted, unfortunately, the world we actually live in requires we assure quality, not assume quality. Dr. Westgard details several examples of quality problems in the healthcare laboratory - and explains what is needed to fix those problems. |

Myths of Quality, which demonstrates that what you see in black and white may not in fact be true. My interest here is to challenge you to think about quality with an open mind, rather than to just assume that quality today is adequate for medical care.

Good data wanted, bad data should not apply, which discusses the STARD initiative to improve the quality of publications on clinical diagnostics. Again, what you find in black and white might not be reliable unless the study is properly performed and the data properly analyzed.

|

| 2. Highlighting the Need for Quality Standards |

The quality of laboratory tests is important and will become more important in the future. But, what quality is actually required for these tests? This is not meant to be a rhetorical question. If we can’t define the quality that is needed, we won’t be able to manage quality in a quantitative way and be sure that the necessary quality is achieved in routine testing. |

The Need for Standard Processes and Standards of Quality defines a framework for quality standards, and reviews the many current "standards" that exist in the laboratory.

Links to CLIA quality requirements, medical decision levels, the Ricos biologic variation database, and more.

|

| 3. Method Validation: What it is. How to do it |

When you look at the results of an experimental study - or conduct one of your own - how do you know if the study has been done correctly? Are the right statistics being used to analyze the experimental data? Are the statistical results understandable? Is the performance of this method acceptable for your own laboratory application? Those are the questions or problems that you must face in method validation studies in the real world. |

The Inner, Hidden, Deeper, Secret Meaning of Method Validation explains why we do Method Validation studies in the first place - and what the essential findings of a study should be.

|

| 4. Regulatory and Accreditation Requirements |

Do you know what rules apply to your laboratory? Here's a short list of the rules and requirements affecting the laboratory: CLIA Final Rules; Interpretive Guidelines; accreditation checklists and standards from the Joint Commission, CAP, and COLA; and guidelines and standards from CLSI (formerly known as NCCLS). Keeping up with the rules is a full-time job in itself. |

Method Validation Regulations details the various rules and requirements of CLIA, Joint Commission, CAP, and more.

|

| 5. The Plan for Method Selection |

How do we choose methods for our laboratory? Do we always pick the cheapest test? Or the fastest test? Or the test that has the best quality? Picking a method doesn't have to be an arbitrary process - there are rational ways to identify and prioritize the characteristics that are most important to your laboratory. |

Selecting a method to validate defines a process for establishing a routine test, and identifies the application, methodology, and performance characteristics that are vital to method selection.

|

| 6. The Experimental Plan |

Short-term Replication, Long-term Replication, Comparison of Methods, Interference, Recovery, the list goes on. How many studies need to be performed during method validation? And what is the best order to perform those studies? |

The Experimental Plan introduces a different perspective on method validation: linking studies to the types of error they assess. This perspective helps bring focus and order to the method validation process.

|

| 7. The Data Analysis Plan |

Random Error, Systematic Error, Proportional and Constant Error, Total Error - what do these terms mean? More importantly, what do those errors mean in "the real world" laboratory? |

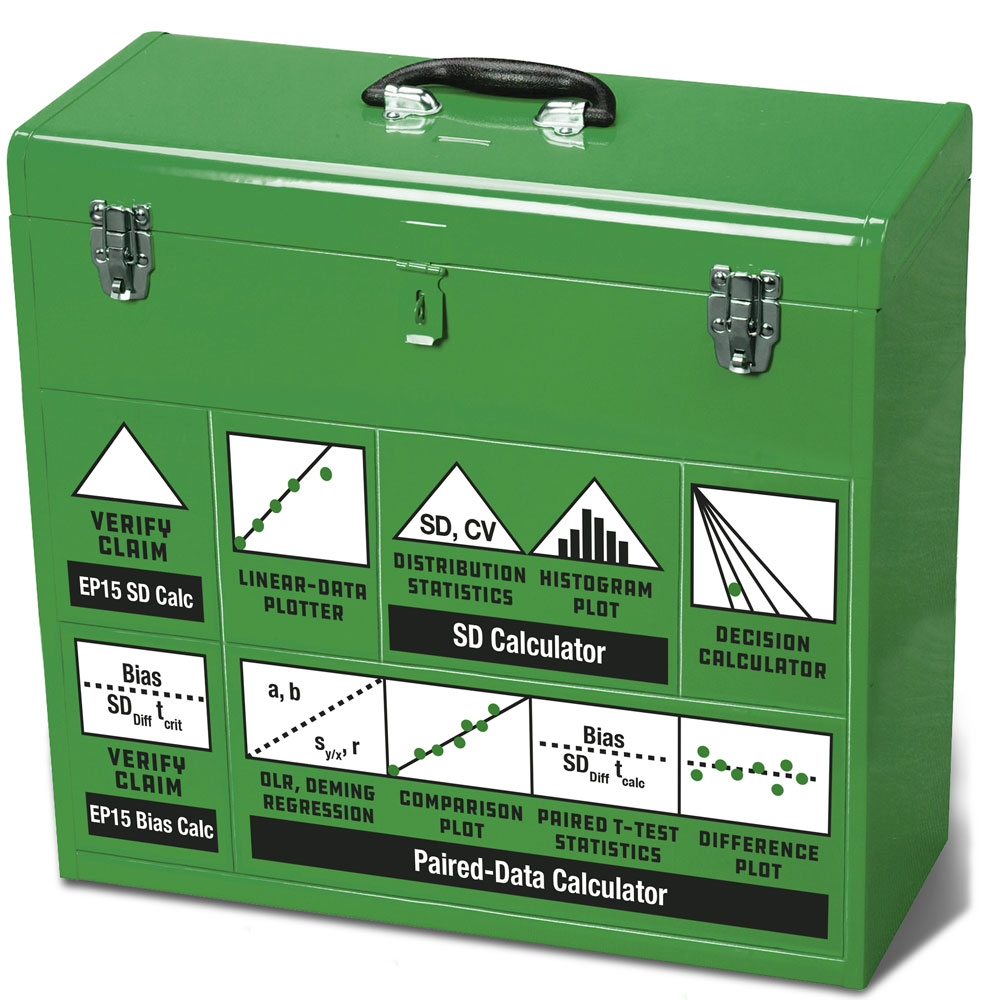

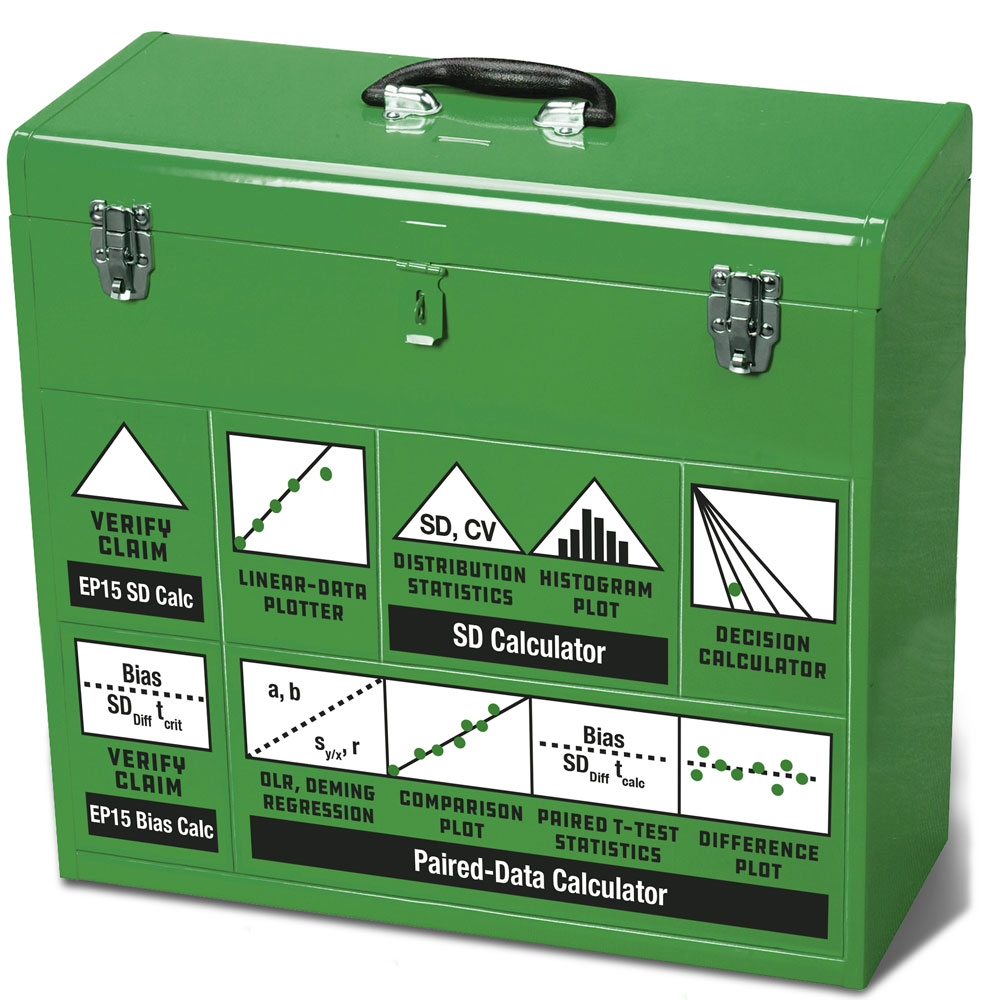

The Data Analysis Toolkit connects the dots between types of errors and laboratory results.

|

| 8. Validating Manufacturers' Claims |

Mean, standard deviation, correlation coefficient, linear regression, the statistics are daunting. What does a mean mean? What's standard about standard deviation? How do these statistics fit into the real-world laboratory? |

The Statistical Calculations provides a concise, practical guide to the real-world meaning of statistics. t-tests, F-tests, t-tables, and F-tables are also explained.

|

| 9. Determining Reportable Range |

Ranges are often supplied by the manufacturer. But are those results truthful? Did they use an adequate number of calibrators and number of replicates? Did they study an appropriate range? Have the data been properly analyzed and interpreted? |

The Linearity (Reportable Range) Experiment explains the nuts, bolts, and proper execution of the reportable range experiment.

|

| 10. Determining Method Imprecision |

Imprecision is a statistic that has many different estimates: short-term, within-run, long-term, between-run, between-day, day-to-day, and/or total imprecision. So how many estimates do you need to calculate? And how are those different estimates calculated? And over what time period? |

The Replication Experiment describes how to determine precision, or imprecision, or random error - whichever term you prefer. Usually a short-term experiment is done first to estimate “within-run imprecision” and a long-term experiment is done later to provide a more realistic estimate for real operating conditions in a laboratory.
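As a minimal sketch of the arithmetic behind a replication experiment, the snippet below computes the mean, standard deviation, and coefficient of variation for one set of replicate results. The function name `imprecision` and the replicate values are hypothetical and purely illustrative; they are not taken from the lesson.

```python
import statistics


def imprecision(results):
    """Return (mean, sample SD, CV in percent) for a list of replicate results."""
    mean = statistics.mean(results)
    sd = statistics.stdev(results)  # sample SD, uses the n - 1 denominator
    cv = 100.0 * sd / mean          # coefficient of variation, in percent
    return mean, sd, cv


# Hypothetical replicate results on one control material (illustrative values only).
replicates = [98.0, 102.0, 100.0, 101.0, 99.0]
mean, sd, cv = imprecision(replicates)
print(f"mean={mean:.2f}  SD={sd:.2f}  CV={cv:.2f}%")
```

The same function can be applied to a within-run data set and to a between-day data set; only the way the replicates are collected differs.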

|

| 11. Determining Method Inaccuracy |

When you hear that the correlation of a method is 0.96 or 0.97 or 0.999, is that all you need to know? What about the slope and y-intercept of the regression equation? Is the number of patient specimens adequate? Is the comparative method a good or bad choice? What about Bland-Altman analysis, Deming and Passing-Bablok regression? |

The Comparison of Methods Experiment gives step-by-step instructions on selecting a test and reference method, a number of samples, as well as what data analysis to use, what graphs to plot, and what interpretation to make.
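To illustrate why the correlation coefficient alone is not enough, here is a small sketch that fits an ordinary least-squares line to paired results and estimates the systematic error at a decision level. Ordinary least squares is assumed here only for simplicity (the lesson may recommend other techniques), and the function names, data, and decision level of 200 are hypothetical.

```python
import math


def ols(x, y):
    """Ordinary least-squares fit of y on x; returns (slope, intercept, r)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r = sxy / math.sqrt(sxx * syy)
    return slope, intercept, r


def systematic_error(slope, intercept, decision_level):
    """Predicted test-method result minus the comparative value at decision_level."""
    return (intercept + slope * decision_level) - decision_level


# Hypothetical paired patient results (illustrative values only).
comparative = [50.0, 100.0, 150.0, 200.0, 250.0]
test_method = [54.0, 106.0, 158.0, 210.0, 262.0]

slope, intercept, r = ols(comparative, test_method)
se = systematic_error(slope, intercept, 200.0)
print(f"slope={slope:.3f}  intercept={intercept:.2f}  r={r:.4f}  SE at 200 = {se:.1f}")
```

In this made-up data set the correlation is essentially perfect, yet the slope and intercept still reveal a systematic difference between the two methods. Correlation does not change under a linear shift or scaling, so it cannot detect this kind of error.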

|

| 12. Estimating "Trueness" |

Healthcare laboratories must be concerned with the issue of “trueness.” In ISO terminology, trueness replaces the term accuracy or inaccuracy and becomes a synonym for systematic error or bias. In addition to the confusion from terminology, there is also a lot of confusion on how to best estimate the systematic error from the data of a comparison of methods experiment. This requires the proper use and interpretation of statistics. |

Statistical Sense, Sensitivity, and Significance reviews the statistics used in Method Validation and explains their statistical and clinical significance.

|

| 13. Judging Method Acceptability |

You've run method validation studies. You've calculated statistics. You've graphed and plotted data. But what does it all mean when you put it together? When do you know you've got a "good" method or a "bad" method? |

The Decision on Method Performance introduces the Method Decision (MEDx) chart, an easy graphical evaluation tool for method performance.
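One way to combine the statistics into a single judgment can be sketched as follows. The criterion used here (absolute bias plus a multiple of the SD, compared with an allowable total error) is an assumption chosen for illustration, not necessarily the rule used on the chart in the lesson. The function names, the default multiplier of 2, and all numbers are hypothetical.

```python
def total_error(bias_pct, cv_pct, multiplier=2.0):
    """Combine inaccuracy and imprecision: |bias| + multiplier * CV (all in percent)."""
    return abs(bias_pct) + multiplier * cv_pct


def is_acceptable(bias_pct, cv_pct, allowable_te_pct, multiplier=2.0):
    """True when the estimated total error does not exceed the allowable total error."""
    return total_error(bias_pct, cv_pct, multiplier) <= allowable_te_pct


# Hypothetical method performance against a hypothetical 10% quality requirement.
print(is_acceptable(bias_pct=2.0, cv_pct=1.5, allowable_te_pct=10.0))
print(is_acceptable(bias_pct=4.0, cv_pct=3.5, allowable_te_pct=10.0))
```

A stricter judgment can be sketched by raising `multiplier`, which demands a larger margin between observed performance and the quality requirement.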

|

| 14. Verifying Reference Intervals |

Is every test the same? Can you use the same reference ranges and limits from your old method on a new method? Or will the new test change all the cutoffs you've been using? Are there ways to transfer, translate, or modify reference ranges that don't involve impractically large studies? |

Reference Interval Transference explains the process of transferring an old reference interval to a new method. Three practical options (divine judgment, verification, and estimation of reference intervals) are demonstrated.

|

| 15. Performing Other Method Validation Experiments |

If you have a test that isn't cleared by the FDA, or if you modify an FDA-cleared test, do you know what additional experiments you need to perform? Do you know when and how to carry out detection limit, interference, and recovery experiments? |

The Interference and Recovery experiments describes the steps of the experiments that estimate the systematic error and/or proportional systematic error caused by other materials that interfere with the method.

The Detection Limit experiment describes the different concepts and experiments that determine the Limit of Quantitation (LoQ), for instance. |
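The basic calculations behind recovery and interference experiments can be sketched as below. This is a simplified illustration: it assumes the baseline sample received an equal volume of diluent so that dilution cancels out, and the function names and numbers are hypothetical rather than taken from the lessons.

```python
def percent_recovery(spiked_result, baseline_result, amount_added):
    """Percent of the added analyte that the method actually measures."""
    return 100.0 * (spiked_result - baseline_result) / amount_added


def interference_bias(with_interferent, without_interferent):
    """Mean difference between samples with and without the suspected interferent."""
    mean_with = sum(with_interferent) / len(with_interferent)
    mean_without = sum(without_interferent) / len(without_interferent)
    return mean_with - mean_without


# Hypothetical recovery data: 50 units added to a sample that reads 100 at baseline.
recovery = percent_recovery(spiked_result=148.0, baseline_result=100.0, amount_added=50.0)
proportional_error = recovery - 100.0  # negative means the method under-recovers
print(f"recovery={recovery:.1f}%  proportional error={proportional_error:.1f}%")

# Hypothetical interference data: duplicate measurements of each sample.
bias = interference_bias([110.0, 112.0], [100.0, 102.0])
print(f"interference bias={bias:.1f}")
```

Whether errors of this size matter depends on the quality requirement for the test, which is a separate judgment from the calculation itself.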

| 16. A Practical Plan for the Laboratory (and Final Exam) |

Most laboratories don't have time to validate methods all day. Method Validation is a process squeezed in between all the other work that needs to be done. So what's the easiest "squeeze"? What's the bare minimum you need to know and do? How do you adapt the studies to your laboratory reality? |

A Practical Plan for the Laboratory provides a concise summary of all the most important points of the course, highlighting key takeaways, noting useful adaptations, and explaining how to analyze studies performed by other laboratories. A useful review before the final exam.

Frequently-Asked-Questions about Method Validation goes through a number of common questions and answers.

Points of Care in using statistics in Method Validation cautions readers against common mistakes in the use of statistics in method validation.

|